Illustration: ICJK 2023-11-08

The campaign leading up to the 2023 Slovak parliamentary elections will make history. Probably for the first time, deepfakes, i.e. computer-generated audio and video, were a part of the pre-election battle. Two days before the vote, alleged footage that appeared to be of Denník N journalist Monika Tódová and the chairman of Progressive Slovakia, Michal Šimečka, circulated on social networks and via chain emails. There was just one problem: The “recorded” conversation never happened. Tens of thousands of people heard it anyway.

From where did it start spreading and on what platforms did it appear? Who published it and what impact did the artificially created conversation have on the outcome of the elections? We sought answers to these questions in an investigative piece that is part of the Safe.journalism.SK project, the aim of which is to monitor attacks against journalists and expose their perpetrators.

The journalist Monika Tódová has never spoken to the politician Michal Šimečka about the manipulation of the elections. But in a short video that spread just a few days before the Slovak election, they were heard talking about it. The artificially created, manipulated video, known as a deepfake, shows a static image of the journalist and the politician. The audio track is of poor quality and doesn’t feel authentic at first hearing, so the words are also written out in subtitles. The chairman of Progressive Slovakia called the recording “colossal, blatant stupidity.” Matúš Kostolný, the editor-in-chief of Denník N, wrote that it was a deepfake that “speaks in the voice of my colleague Monika Tódová in made-up sentences.”

The inauthenticity of the video is also confirmed by investigace.cz data analyst Josef Šlerka, but it is very difficult to determine how, exactly, it was created. “Whether it was created by someone uploading it and then using artificial intelligence to dub it, or whether someone trained artificial intelligence and then created an audio file from it, it’s impossible to say,” he adds. “There isn’t as of yet a really effective, accessible and above all reliable tool for detecting AI-generated content, from audio to video to photos,” confirmed Bellingcat journalist and researcher Michael Colborne.

Nevertheless, the video, released shortly before Slovaks went to the polls, was distributed very effectively on social networks and in emails. The most successful sharers of the fake video were politicians: former Supreme Court head and ex-minister Štefan Harabin and multi-party candidate and former MP Peter Marček. Apart from their pro-Kremlin views, they also share common activities. Most recently, a few days ago, they appeared in front of the presidential palace to protest Zuzana Čaputová’s decision to not appoint Rudolf Huliak as environment minister.

Tracing the path of the deepfake video is very difficult. The fact that it first started spreading from Telegram made the analysis of its origin even more difficult. Our findings show that the fake video initially became publicly widespread after being shared by Štefan Harabin’s Telegram account. However, he was not the first to publish it. He forwarded it from an account named “Gabika Ha”. Since it is a hidden private account, the trace of the initial dissemination of the video ends here. Štefan Harabin did not answer the question of whether the account could be associated with his wife Gabriela Harabinová, a name that bears some similarity to “Gabika Ha.”

“We can’t identify patient zero,” said investigace.cz’s Josef Šlerka, who also heads the New Media Studies department at the Faculty of Arts at Charles University. If the content is shared by a Telegram group, the origin can be traced, but if it comes from a hidden personal profile, as in the case of Gabika Ha’s account, nothing more can be found out, the expert explained.

The fake video appeared on Telegram, but gained a bigger reach after it was shared on Facebook on Peter Marček’s profile. Experts estimate that up to 100,000 users may have seen it. Content spreads further on Facebook, as it is the most widely used social network in Slovakia. According to Statista data, Facebook has about 3.5 million users in Slovakia.

The spread of manipulative and fake content can also undermine the credibility of democratic elections, according to experts. A new survey by the Central European Digital Media Observatory (CEDMO) found that up to 54 percent of voters across the Slovak population feared election fraud. Several political parties, most notably SMER and Republika, used the subject of possible election manipulation in their campaigns.

At the same time as the fake video of journalist Tódová and PS leader Šimečka started spreading through Slovak social platforms, the head of the Russian secret service, Sergei Naryshkin, was also talking about the manipulation of the Slovak elections. During the two-day pre-election moratorium, he accused the United States of election interference. The same narrative was also presented by Štefan Harabin on Russian state television the following day.

The footage spread first on Telegram

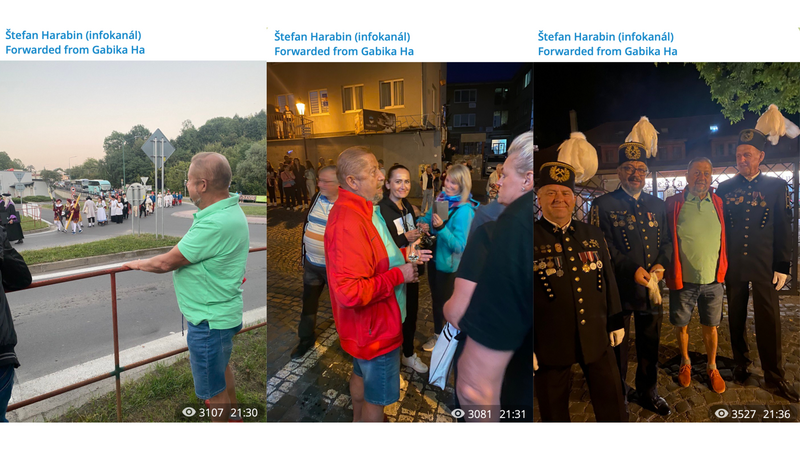

An analysis of social media platforms indicates that Telegram was most likely the original source of the distribution of the deepfake ahead of the parliamentary elections. On September 28 just after noon (12:05), the video made its way from Gabika Ha’s hidden account and onto Štefan Harabin’s public channel. According to Telegram’s statistics, the video on Harabin’s channel has been viewed by over 13,000 users and has been shared almost 500 times (including the times it was shared via private message).

Source: Telegram/Štefan Harabin

Whether Gabika Ha’s hidden account was its first source cannot be determined from publicly available data. The fake video may have previously circulated in non-public channels and groups. We tried to contact Gabika Ha’s account directly through the Telegram app, but it did not respond to our calls.

Unsuccessful attempt to call the account “Gabika Ha”. Source: ICJK

According to the reconstruction of the video’s spread by the Center for Combating Hybrid Threats of the Ministry of the Interior, this account was the first place where the deepfake video appeared on Telegram.

However, our findings suggest that Štefan Harabin did not share content from the account accidentally or coincidentally. The Telegram profile of the former justice minister and pro-Russian propaganda disseminator had already forwarded content from user Gabika Ha 27 times in the past.

The first time was in July 2021, and, to date, the most recent time was when he shared the deepfake on September 28. On September 9, 2023, Harabin shared more photos from Gabika Ha’s account than he had shared from that account before or since. These are photos of Štefan Harabin’s meetings with fans at the Salamander Days in Banská Štiavnica. From the context of the photographs, it appears that the person who published them through Gabika Ha’s account must have accompanied Harabin throughout the day. Harabin also shared the photos via a video shared from this account after he managed to create a new account on Facebook, where he had been blocked for a long time.

Photos of Harabin from the Salamander Days. Source: Telegram / Gabika Ha

We contacted Harabin directly with questions about sharing the deepfake. “I don’t remember sharing anything like that,” he claimed in a brief phone interview. When asked if he knew who owns Gabika Ha’s account and whether it was his wife, Gabriela Harabinová, he hung up the phone.

The so-called metadata of the file could also help us identify where the fake video came from. Telegram is one of the last platforms that preserves this information. However, the metadata of this deepfake video only tells us the date of creation of the video file. It appears to have been made on September 28 after 10 a.m., shortly before its first identified appearance on Telegram.

The fake video that spread was in line with Russia’s narrative

As election day approached, Russian state media began to report more intensively on events in Slovakia. Even Russia’s secret services commented on them. On the day when the deepfake video of journalist Monika Tódová and PS chairman Michal Šimečka appeared, Russian intelligence, specifically the Foreign Intelligence Service (SVR), issued a press release about alleged US efforts to influence the results of the Slovak elections. Russian state news agency RIA Novosti picked up the statement at 11:43 local time.

SVR intelligence chief Sergei Naryshkin accused the United States of instructing allies to cooperate with business and political circles “to secure the American-demanded voting results.” The result should have been, “as the US expects, the victory of its proxy, the liberal party Progressive Slovakia (…)”, the Russian secret service said in a press release. The party’s chairman, Michal Šimečka, was also mentioned, and the head of Russian intelligence spoke of “coercion, blackmail, intimidation and bribery,” all of which were allegedly part of the effort to manipulate Slovakia’s election.

The Slovak foreign ministry strongly condemned these statements, criticized Russia for interfering in the elections during the pre-election campaign moratorium, and summoned the Russian ambassador. “The department of diplomacy strongly protests against the false statement of Russian intelligence which cast doubt on the integrity of the free and democratic election in Slovakia. We consider such deliberately spread disinformation to be unacceptable interference by the Russian Federation in the election process,” the ministry wrote in its statement. The embassy denied the allegations.

The allegations in the Russian intelligence statement were in line with the content of the deepfake video, which circulated at a similar time. “There are undoubtedly similar features, but at the moment we cannot prove a connection between the two cases,” Daniel Milo, head of the Interior Ministry’s Center for Combating Hybrid Threats, told Denník N.

On September 29, the evening before the elections, the Slovak elections were also the main focus of the news on the Russian state television network Rossiya 1. Denník N pointed out a report by Daria Grigorova about the possibility of US manipulation of the Slovak election results. It was Štefan Harabin who spoke on camera about this theory and, according to the footage, he was the only one who gave an interview to the Russian propagandist.

We also wanted to ask Štefan Harabin when he gave the interview to Rossiya 1 and whether his statements were related to his decision to share the fake video on social networks. However, he has not picked up the phone since the first interview and has not answered our questions.

Daria Grigorova and Štefan Harabin. Source: Reprofoto/smotrim.ru

Facebook: centre of spread

Although the fake video first appeared on Telegram, it reached tens of thousands of users via Facebook. According to AFP fact-checker Robert Barca, who verifies the truthfulness of information on Slovak Facebook, it spread mainly through personal accounts, “like most viral false content on Facebook.” The most successful post, according to Barca, was that of a former member of the national council, Peter Marček.

Marček is the founder of multiple political parties. He was present at the birth of the political entities that later turned into Boris Kollár’s Sme Rodina party and Republika, which is made up of defectors from Marian Kotleba’s ĽSNS party. Marček was later expelled from both of them.

In 2018, Marček traveled to Russian-occupied Crimea while still a member of the national council. He also met with Crimean Prime Minister Sergei Aksyonov and other representatives of the annexed territory. He also stated at the time that “Crimea is, was and will be Russian.” The European Platform for Democratic Elections also lists him in its database of politically biased election observers.

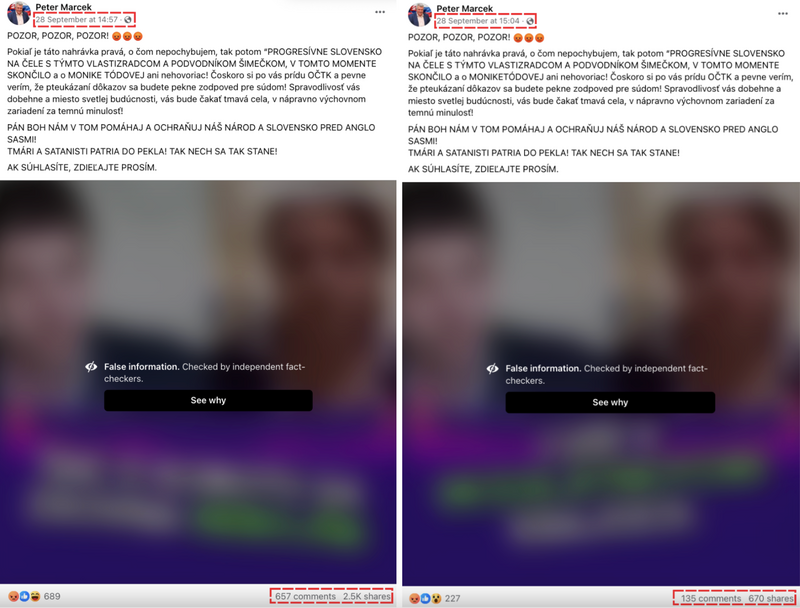

Marček’s posts with the deepfake video. Source: Facebook/Peter Marček

On September 28, Marček shared the fake video twice on his two personal Facebook accounts. He posted it around three o’clock in the afternoon. AFP fact-checkers began verifying it at 3:45 p.m. An explanatory text saying that the video of the conversation between the journalist and the PS leader was not authentic was published shortly before 10 p.m. “In this case, we were alerted to these particular posts by sources within the expert community. We weren’t alerted by Meta or anything like that,” explained disinformation expert Barca, who was tasked with verifying the deepfake video from Marček’s profile.

According to statistics, the video gained approximately 50,000 views through Marček’s posts, though it garnered fewer than 5,000 interactions, of which only 3,236 were reshares. According to Barca, the fake video had more than 100,000 views in total. “Another 30,000 views [from] one viral post on Instagram,” Barca added.

Marček also shared the video on his Telegram channel, but only a minimal number of users saw it there.

Although Facebook flagged the fake video as fake news the same day, following the AFP analysis, the former MP had still not removed it from his profile at the time of publication of this article.

When asked by the ICJK where he obtained the fake video, Peter Marček did not give a direct answer: “I receive about 300 different messages, e-mails (…) I choose what I like and post it or write a status about it.” We asked the ex-MP whether he considers the deepfake recording credible, given that he still has it on his profile, and whether he sees it as problematic that he shared a fake video during the pre-election moratorium. However, we did not receive a response to these questions.

This was not the first time Marček shared false information on his profile. Before the elections, he circulated a video claiming to show US soldiers near Bratislava’s SNP Bridge. “Intimidation of BA residents before the elections to the National Assembly of the Slovak Republic? Or did they go to protect the US embassy?” he asked in his post. The post was also examined by AFP disinformation experts. According to their analysis, these were Italian soldiers helping to protect Slovak airspace.

Personal accounts played a key role

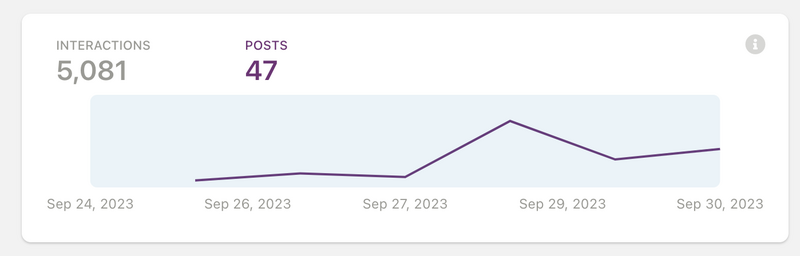

A CrowdTangle tool analysis confirms that the fake video was spread mainly through personal accounts. In the last week of September, only 47 posts containing the keywords “Tódová” or “wiretap” appeared on Facebook pages, public groups, and verified profiles. Only 20 of those actually referred to the fake video, and did so either by re-sharing it (13 posts) or debunking it (8 posts).

Source: Meta/CrowdTangle

This may be because the video was distributed through unverified personal profiles, whose content CrowdTangle neither processes nor keeps statistics on. Another possible explanation is that Facebook pages and groups deleted it after users posted about the inauthenticity of the footage or urged people not to share the fake video in question.

Tracking and debunking can be challenging

Finding fake or malicious content on Facebook or Telegram is not easy. Analytics tools on social networks mainly work on a keyword basis and, in the case of Facebook, only cover so-called Pages, public groups, and verified profiles. Meanwhile, disinformation is often spread through personal and anonymous profiles. Moreover, search engines may not pick up posts containing only video or audio.

Josef Šlerka, data analyst at investigace.cz, said that the problem of content analysis also exists on Telegram. “It is currently not possible to search for video content on Telegram, as nothing can be searched there at all.” A different analytical tool is needed for that. “Technically, it’s complicated and expensive,” he explained.

Another problem is the verification of artificial intelligence (AI)-generated content. Although almost anyone can create this content, detecting the use of AI is not so easy. “To my knowledge, there are no affordable and reliable methods for detecting AI-generated audio recordings,” points out AFP fact-checker Robert Barca. He therefore reached out to experts in technology, social platforms, and hybrid threats to provide expert opinions when analyzing the fake conversation between journalist Tódová and politician Šimečka. “In the text, I also pointed out specific passages and times in the recording that showed signs of artificial generation based on unnatural intonation and melody of speech,” he added.

Michael Colborne, a journalist and analyst for the Bellingcat project, takes a similar view. “Some of the existing tools online for detecting AI images, for example, have led people to incorrectly draw conclusions about the veracity of images because of the unreliability of these tools and people’s lack of understanding of AI,” he explained.

“There’s an irony as well: People can, and are, reject things as, ‘oh, that’s AI,’ with no proof that they’re AI,” Colborne says. He believes such claims have the potential to further disrupt democratic discourse. “‘Everything can be fake,’ basically, which despite the advances of tech, isn’t quite the case,” he added.

The video has attracted the attention of not only experts but also state institutions dealing with disinformation and hybrid threats. Among the first to react were the police, who, just an hour after Marček’s post, published a warning about fake videos and recordings created with the help of artificial intelligence.

The fake video was also examined by the Interior Ministry’s Center for Combating Hybrid Threats, which, in its analysis, found deepfake content to potentially have a significant impact on the credibility of the elections. Special unit analysts also identified Telegram as a source of further dissemination. According to the report, “the video began spreading on the Telegram app in several Slovak groups and channels, from where it was subsequently shared on TikTok, Facebook, and YouTube.” The center estimates the cumulative number of re-shares at more than 5,000. It was also disseminated via chain email messages.

The Slovak Media Services Board also intervened in cooperation with the center. It alerted the relevant platforms, such as Facebook, YouTube and TikTok. They subsequently blocked or deleted the problematic content. The problem, however, remains the minimally regulated Telegram, where the video is still available.

Cover illustration: kremlin.ru, YouTube/StefanHarabin, TASR – Marko Erd

Karin Kőváry Sólymos is a Slovak journalist at the Investigative Center of Ján Kuciak. Previously, she was an editor and presenter at the Hungarian channel of the Slovak public service media. During her university years, she was an analyst for the only fact-checking portal in Slovakia. She was a recipient of the Novinarska Cena 2022.